Near real-time burnt area mapping using the DEA datacube

Sign up to the DEA Sandbox to run this notebook interactively from a browser

Compatibility: Notebook currently compatible with the DEA Sandbox environment only

Products used: ga_s2am_ard_3, ga_s2bm_ard_3, ga_s2bm_ard_3, ga_ls8c_ard_3, ga_ls9c_ard_3

IMPORTANT: This notebook and the datasets visualised and produced are not to be used for safety of life decisions. For local updates and alerts, please refer to your state emergency or fire service.

Background

This notebook shows an example of how to use DEA’s near real-time freely available imagery for users tracking recent fire events. This notebook outlines a workflow to select the most suitable ‘near real-time’ and baseline imagery for comparison from the Landsat 8 and 9 and Sentinel-2A, -2B and -2C sensors. Thresholding is applied to detect unverified burnt areas, before creating an exportable polygon vector file.

Note: It can take up to 48 hours after an image is captured by a sensor to become available for analysis in the DEA Sandbox Environment.

What does ‘Near Real-time’ Burnt Area Mapping mean?

“Near real-time (NRT)” or “Rapid Mapping” within this context refers to satellite data/imagery from the Sentinel-2 and Landsat satellites that will be processed as soon as possible after the data is received by DEA. Under optimal circumstances, DEA aims to make imagery available within hours of acquiring source images from data providers. To support faster availability of data, not all the corrections used in normal imagery processing will be applied. The imagery made available in this way may be of a lesser quality than otherwise achievable after DEA’s established processing workflow, but they will be available much sooner. The processing is often complete within a few hours, meaning the imagery may be available on the day of capture.

Although DEA makes every effort to make imagery available ASAP, unforeseen challenges may cause delays in image availability.

When to use this notebook

This notebook is designed to map the extent of fires within Australia over the previous fortnight dependent on the availability of suitable imagery. The output of this notebook does not measure the severity of fires.

For users interested in mapping historical fires, please use the Burnt Area mapping using Sentinel-2 data notebook instead.

Normalised Burn Ratio (NBR) and delta Normalised Burn Ratio (dNBR)

The Normalised Burn Ratio (NBR) is a Fire Severity Index (FSI) that uses the differences in the way healthy green vegetation and burnt vegetation reflect light to detect burnt pixels in multi-spectral imagery. The NBR index requires signals from the NIR (Near Infrared) and SWIR (Short-wave Infrared) parts of the electromagnetic spectrum and is defined below.

Comparing the most recent NBR values to a baseline or past NBR (i.e. dNBR) can assist in removing noise and isolating environmental change in an EO workflow. The change in NBR is called the delta NBR (dNBR) and is defined as:

More information on NBR and dNBR can be found in the Burnt Area mapping using Sentinel-2 data notebook.

Relativized Burn Ratio (RBR)

The Relativized Burn Ratio (RBR) is a variation of the Relativized delta Normalised Burn Ratio (RdNBR) developed by SA parks that solves some of the numerical problems with the original RdNBR algorithm. Like the RdNBR, the RBR aims to improve burnt area mapping over burnt areas that had low levels of pre-fire vegetation by considering the baseline NBR measurement to prevent these areas being thresholded out.

Later on in this analysis, you may wish to use the RBR index instead of the dNBR index if your Area of Interest (AOI) has considerable amounts of burnt area that originally had low vegetation levels. For example, the RBR may work better mapping fires over AOI’s with both barren grasslands and dense canopies then the dNBR method.

Description

This notebook contains the following steps:

Define suitable date ranges for the baseline and Near Real-time Images

Calculate NBR and dNBR dataarray’s and perform optional post-processing

1. Getting started and defining an Area of Interest (AOI)

To run this analysis, run all the cells in the notebook, starting with the “Load packages” cell.

Load packages

Import Python packages that are used for the analysis.

[1]:

import math

import sys

from datetime import datetime, timedelta

import datacube

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

import xarray as xr

from datacube.utils import cog

from scipy import ndimage

from skimage import morphology

sys.path.insert(1, "../Tools/")

from dea_tools.bandindices import calculate_indices

from dea_tools.datahandling import load_ard

from dea_tools.plotting import display_map, rgb, plot_variable_images

from dea_tools.spatial import xr_rasterize, xr_vectorize

Connect to the datacube

Connect to the datacube so we can access DEA data. The app parameter is a unique name for the analysis which is based on the notebook file name.

[2]:

dc = datacube.Datacube(app="Burnt_area_mapping_near_realtime")

Select location

The selected latitude and longitude will be displayed as a red box on the map below the next cell. This map can be used to find coordinates of other places, simply scroll and click on any point on the map to display the latitude and longitude of that location.

NB: Selecting an excessively large AOI may exceed the amount of available memory in the Sandbox environment causing the notebook to fail. If this occurs, try reducing the size of the AOI, or increasing the values of the min_gooddata variables to reduce the number of images loaded.

[3]:

# Set the central latitude and longitude

central_lat = -32.9290

central_lon = 149.5118

# Set the buffer to load around the central coordinates.

buffer = 0.125

# Set a name for the AOI (used to name exported products)

area_name = "Tambaroora_NSW"

# Compute the bounding box for the study area

study_area_lat = (central_lat - buffer, central_lat + buffer)

study_area_lon = (central_lon - buffer, central_lon + buffer)

#Display the AOI on an interactive map

display_map(x=study_area_lon, y=study_area_lat, margin=-0.2)

[3]:

2. Define suitable date ranges for the baseline and Near Real-time Images

By default, images from the previous 14 days from the current date are extracted from the datacube and visualised for manual selection. If a different NRT date range is desired, enter this for the nrt_time_delta variable in DD format.

For the selection of a baseline image, the default is to extract and visualise imagery that was acquired within the period extending between 14 days to two months prior to the current date. If a different date range is preferred, adjust the baseline_time_delta below in DD format.

The date ranges calculated below are a good starting point, but your chosen AOI may require larger or smaller date ranges.

For the purpose of demonstrating the capability of this notebook during a fire event, a static date has been entered for the ``nrt_date_end`` variable. To run this notebook for a current fire, please instead run the second line of code noted below to set ``nrt_date_end`` to the current date

[4]:

# Near Real-time event date. '2023-03-20' is used to demonstrate the notebooks capabilities.

# For use mapping a current event, please move the '#' from the second to the first line below.

nrt_date_end = "2023-03-20"

#nrt_date_end = datetime.today().strftime("%Y-%m-%d")

[5]:

# Define the date ranges for NRT and baseline imagery requests. The default is the preceding 14 days for

# nrt imagery, then the two months prior to that for baseline imagery.

nrt_time_delta = 14

baseline_time_delta = 42

# Calculate the beginning of the 'nrt' date range.

nrt_date_start = datetime.strftime(

((datetime.strptime(nrt_date_end, "%Y-%m-%d")) - timedelta(days=nrt_time_delta)),

"%Y-%m-%d",

)

# Date range from which to extract and visualise potential baseline images. Can be manually changed by entering a date in 'YYYY-MM-DD' format.

baseline_start = datetime.strftime(

(

(datetime.strptime(nrt_date_start, "%Y-%m-%d"))

- timedelta(days=baseline_time_delta)

),

"%Y-%m-%d",

)

baseline_end = datetime.strftime(

((datetime.strptime(nrt_date_end, "%Y-%m-%d")) - timedelta(days=nrt_time_delta)),

"%Y-%m-%d",

)

# Print Date ranges

print(

f"Potential Near Real-time Images will be extracted between {nrt_date_start} and {nrt_date_end}"

)

print(

f"Potential baseline Images will be extracted between {baseline_start} and {baseline_end}"

)

Potential Near Real-time Images will be extracted between 2023-03-06 and 2023-03-20

Potential baseline Images will be extracted between 2023-01-23 and 2023-03-06

3. Load, Visualise, and Select a Near Real-time image

The availability of cloud-free and recent imagery is the largest limiting factor in undertaking Burn Area Mapping in near real-time while a fire event occurs. Therefore, we will look at recent images from both the Sentinel-2 and Landsat imagery collections to maximise the change of finding a suitable image.

To reduce the impact of cross-sensor influence on our analysis, we will only load baseline imagery from the sensor from which our near real-time image is selected.

Define Load Parameters

NB: The min_gooddata_nrt and min_gooddata_base variables are used to filter out poor-quality images for the chosen AOI in the Near Real-time and Baseline Imagery extracts respectively. The values assigned to these variables set the threshold for the number of ‘good’ (i.e. cloud free) pixels required for the image to be extracted.

A min_gooddata value of 0.9 will ensure that only images with over 90% cloud free pixels over the AOI are extracted. To increase the number of images extracted, these values can be reduced to allow images with more cloudy pixels to be extracted.

[6]:

# Set spatial, spectral, and quality parameters for the imagery extracted from the datacube.

# Setting the min_gooddata value lower for the near real-time extract increases the number of images to choose from

min_gooddata_nrt = 0.6

min_gooddata_base = 0.8

# Different measurements are required for each sensor due to changes in band nomenclature

measurements_s2 = [

"nbart_blue",

"nbart_green",

"nbart_red",

"nbart_nir_1",

"nbart_swir_3",

]

measurements_ls = [

"nbart_blue",

"nbart_green",

"nbart_red",

"nbart_nir",

"nbart_swir_2",

]

# Define the resolution to be used for each sensor. The Sentinel-2 resolution can be reduced to 10m if desired.

s2_res = (-20, 20)

ls_res = (-30, 30)

# Define the coordinate system

output_crs = "EPSG:3577"

# Create a query object for the universal parameters

query = {

"x": study_area_lon,

"y": study_area_lat,

"output_crs": output_crs,

"group_by": "solar_day",

}

Load images from the Sentinel-2 collection

[7]:

# Load imagery from the Sentinel-2 Collection. Note the s2cloudless mask is applied instead of the standard fmask.

nrt_s2 = load_ard(

dc=dc,

products=["ga_s2am_ard_3", "ga_s2bm_ard_3", "ga_s2cm_ard_3"],

time=(nrt_date_start, nrt_date_end),

measurements=measurements_s2,

min_gooddata=min_gooddata_nrt,

resolution=s2_res,

cloud_mask="s2cloudless",

**query

)

#Assign a custom attribute 'sensor' to the Sentinel-2 dataset for plotting purposes.

nrt_s2 = nrt_s2.assign_attrs({"sensor":"s2"})

Finding datasets

ga_s2am_ard_3

ga_s2bm_ard_3

ga_s2cm_ard_3

Counting good quality pixels for each time step using s2cloudless

Filtering to 5 out of 6 time steps with at least 60.0% good quality pixels

Applying s2cloudless pixel quality/cloud mask

Loading 5 time steps

Load images from the Landsat collection

[8]:

# Load imagery from the Landsat Collection.

nrt_ls = load_ard(

dc=dc,

products=["ga_ls8c_ard_3", "ga_ls9c_ard_3"],

time=(nrt_date_start, nrt_date_end),

measurements=measurements_ls,

min_gooddata=min_gooddata_nrt,

resolution=ls_res,

**query

)

#Assign a custom attribute 'sensor' to the Landsat dataset for plotting purposes.

nrt_ls = nrt_ls.assign_attrs({"sensor":"ls"})

Finding datasets

ga_ls8c_ard_3

ga_ls9c_ard_3

Counting good quality pixels for each time step using fmask

Filtering to 0 out of 3 time steps with at least 60.0% good quality pixels

Applying fmask pixel quality/cloud mask

Loading 0 time steps

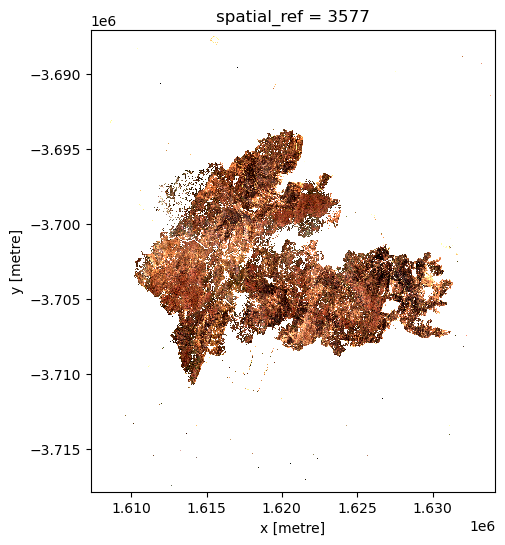

Near Real-time Image Visualisation

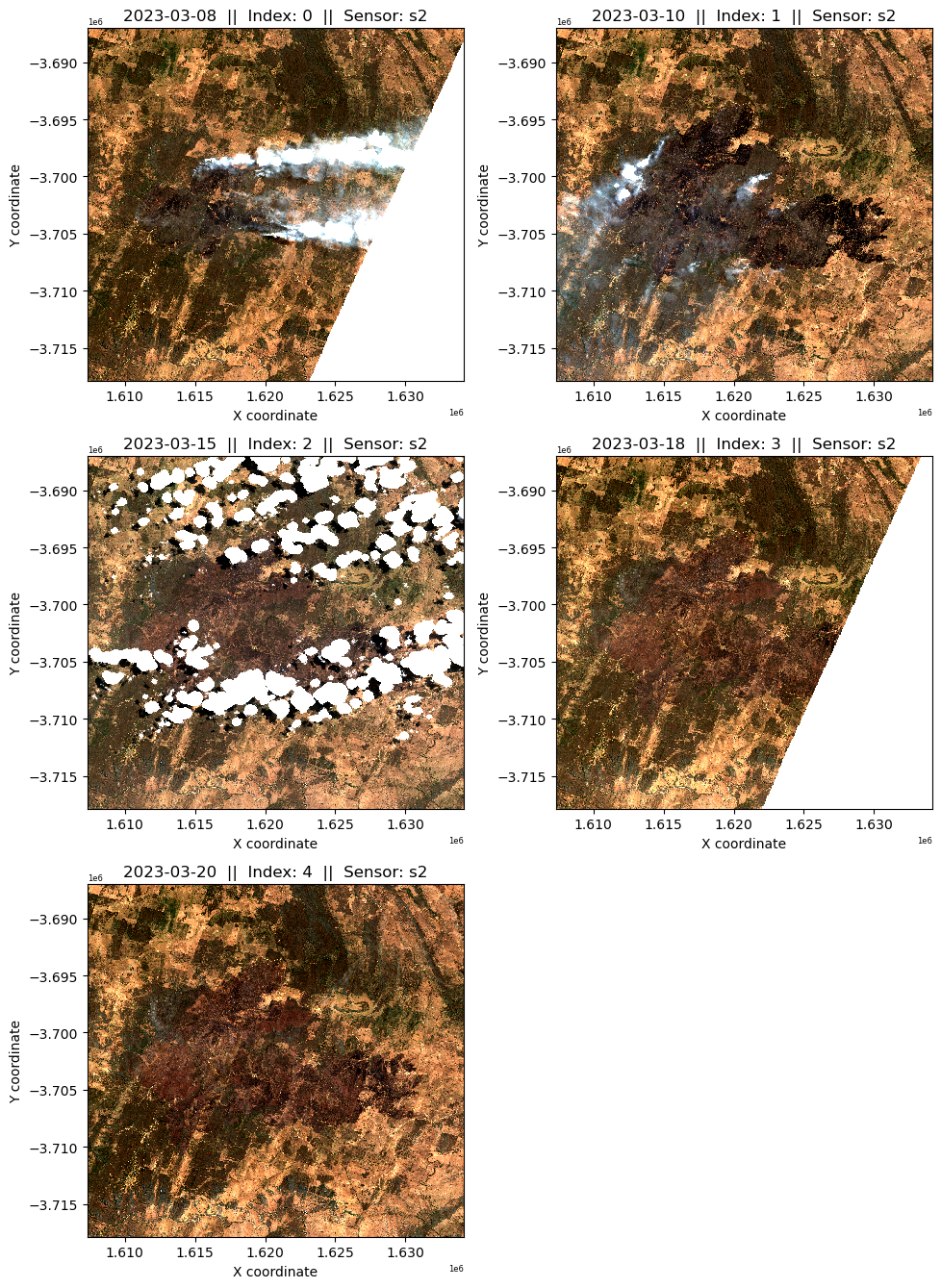

Images from both the Sentinel-2 and Landsat collections that met the spatial, temporal, and quality requirements we previously defined will be visualised below. Make note of the date, index number and sensor of each image located in the subplot title.

In the next step, we will need to set the nrt_img_index and nrt_sensor variables to the chosen images index and sensor respectively.

It is not uncommon for no suitable Landsat images to have been acquired that meet the near real-time requirements in the previous 14 days. If this occurs, the notebook will continue to run without issue.

[9]:

#Plot imagery from the Sentinel-2 satellites by running the `plot_variable_images` tool on the `nrt_s2` xarray dataset.

plot_variable_images(nrt_s2)

#Plot suitable imagery from the Landsat satellites by running the `plot_variable_images` tool on the `nrt_ls` xarray dataset.

if nrt_ls.time.size == 0:

print('No suitable Landsat imagery')

else:

plot_variable_images(nrt_ls)

/home/jovyan/development/dea-notebooks/Real_world_examples/../Tools/dea_tools/plotting.py:898: FutureWarning: The return type of `Dataset.dims` will be changed to return a set of dimension names in future, in order to be more consistent with `DataArray.dims`. To access a mapping from dimension names to lengths, please use `Dataset.sizes`.

plot_count = img_collection.dims["time"]

No suitable Landsat imagery

Near Real-time Image Selection

From the above images, note the index value of the most appropriate scene and set the nrt_img_index variable below to that value. For example, if the first image displayed is the most desirable, set nrt_img_index = 0.

Additionally, set the rt_sensor variable to the sensor that the chosen near real-time image originated from, s2 for Sentinel-2 or ls for Landsat. Only imagery from the selected collection will be displayed for the baseline image visualisaion below to reduce cross-sensor influence on the analysis.

[10]:

# Index selection for the Near Real-time image chosen above. The default value of -1 selects the most recent image regardless of suitability.

nrt_img_index = -1

# Set the below variable to either 's2' or 'ls' depending on the sensor your chosen image is from.

nrt_sensor = "s2"

# Assign the selected image to the rt_img variable, and identify which sensor and measurements to use to find a baseline image.

if nrt_sensor == "s2":

nrt_img = nrt_s2.isel(time=nrt_img_index)

baseline_products = ["ga_s2am_ard_3", "ga_s2bm_ard_3", "ga_s2cm_ard_3"]

baseline_measurements = measurements_s2

index_collection = "ga_s2_3"

baseline_res = s2_res

baseline_cloud_mask = "s2cloudless"

elif nrt_sensor == "ls":

nrt_img = nrt_ls.isel(time=nrt_img_index)

baseline_products = ["ga_ls8c_ard_3", "ga_ls9c_ard_3"]

baseline_measurements = measurements_ls

index_collection = "ga_ls_3"

baseline_res = ls_res

baseline_cloud_mask = "fmask"

else:

print(

"Please make sure the 'nrt_sensor' variable is correctly set to either s2 or ls"

)

4. Load and Select baseline Imagery

[11]:

# Load imagery from whichever collection the NRT image was selected from.

baseline = load_ard(

dc=dc,

products=baseline_products,

time=(baseline_start, baseline_end),

measurements=baseline_measurements,

min_gooddata=min_gooddata_base,

resolution=baseline_res,

cloud_mask=baseline_cloud_mask,

**query

)

#Assign a custom attribute 'sensor' to the baseline dataset for plotting purposes.

baseline = baseline.assign_attrs({"sensor": nrt_sensor})

Finding datasets

ga_s2am_ard_3

ga_s2bm_ard_3

ga_s2cm_ard_3

Counting good quality pixels for each time step using s2cloudless

Filtering to 3 out of 17 time steps with at least 80.0% good quality pixels

Applying s2cloudless pixel quality/cloud mask

Loading 3 time steps

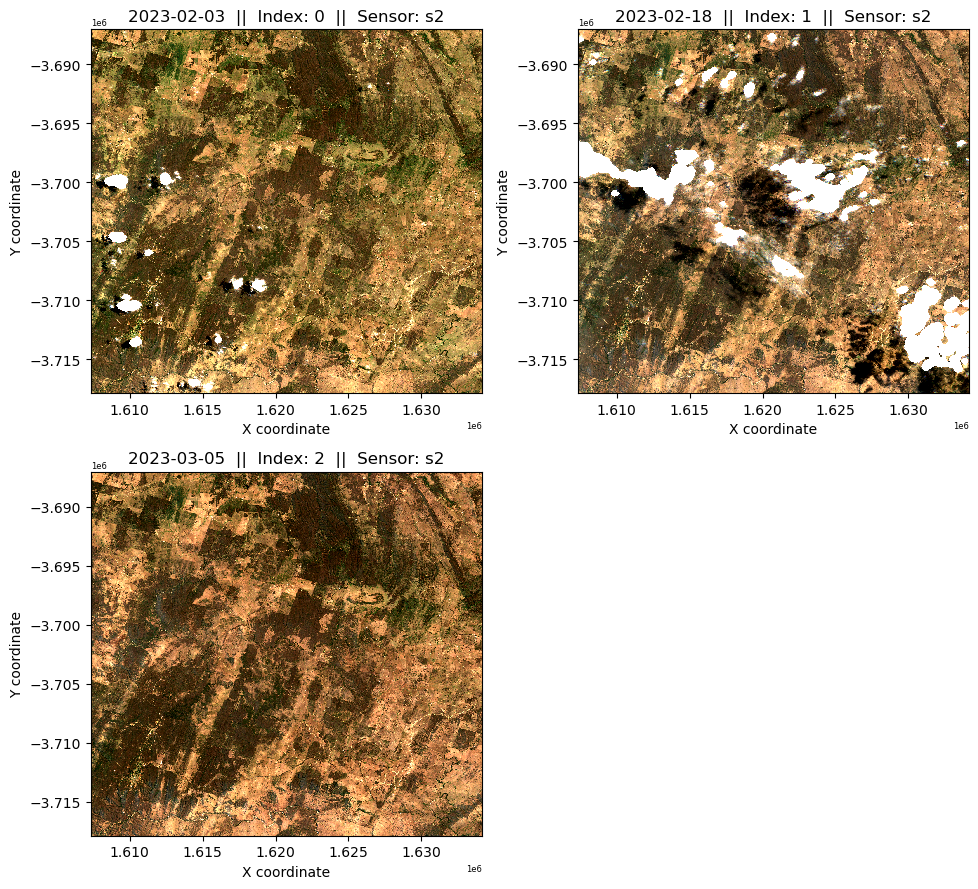

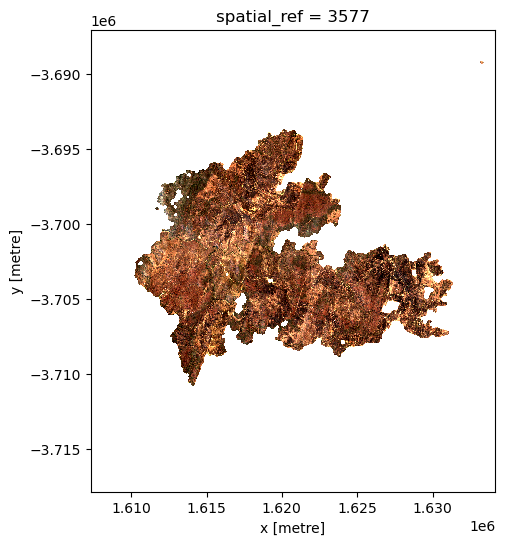

Visualise the extracted baseline imagery

[12]:

#Plot imagery from the baseline dataset by running the `plot_variable_images` tool on the `baseline` xarray dataset.

plot_variable_images(baseline)

/home/jovyan/development/dea-notebooks/Real_world_examples/../Tools/dea_tools/plotting.py:898: FutureWarning: The return type of `Dataset.dims` will be changed to return a set of dimension names in future, in order to be more consistent with `DataArray.dims`. To access a mapping from dimension names to lengths, please use `Dataset.sizes`.

plot_count = img_collection.dims["time"]

Baseline Image Selection

A baseline image from the above selection must be chosen. It is important to remember that the dNBR index measured environmental changes associated with fire induced landscape change. Therefore, a scene similar to the near real-time image that is free from fire affects will provide the best comparison point to detect burn scars. For example, if the landscape in the near real-time image is dry, it is better to choose a baseline image where the landscape has a similar level of dryness compared to a greener image.

Note the index number of the baseline image chosen above, then set it to the baseline_img_index variable.

[13]:

# Set the baseline_img_index variable to the index of the chosen baseline image

baseline_img_index = 2

# Extract the chosen baseline image to the baseline_img variable

baseline_img = baseline.isel(time=baseline_img_index)

5. Calculate NBR and dNBR dataarray’s and perform optional post-processing

[14]:

# Calculate NBR for the near real-time image and assign it to the rt_NBR variable

nrt_image_NBR = calculate_indices(

nrt_img, index="NBR", collection=index_collection, drop=False

)

nrt_NBR = nrt_image_NBR.NBR

# Calculate NBR for the baseline image and assign it to the baseline_NBR variable

baseline_image_NBR = calculate_indices(

baseline_img, index="NBR", collection=index_collection, drop=False

)

baseline_NBR = baseline_image_NBR.NBR

# Calculate dNBR (delta NBR) by differing the two indices

dNBR = baseline_NBR - nrt_NBR

[15]:

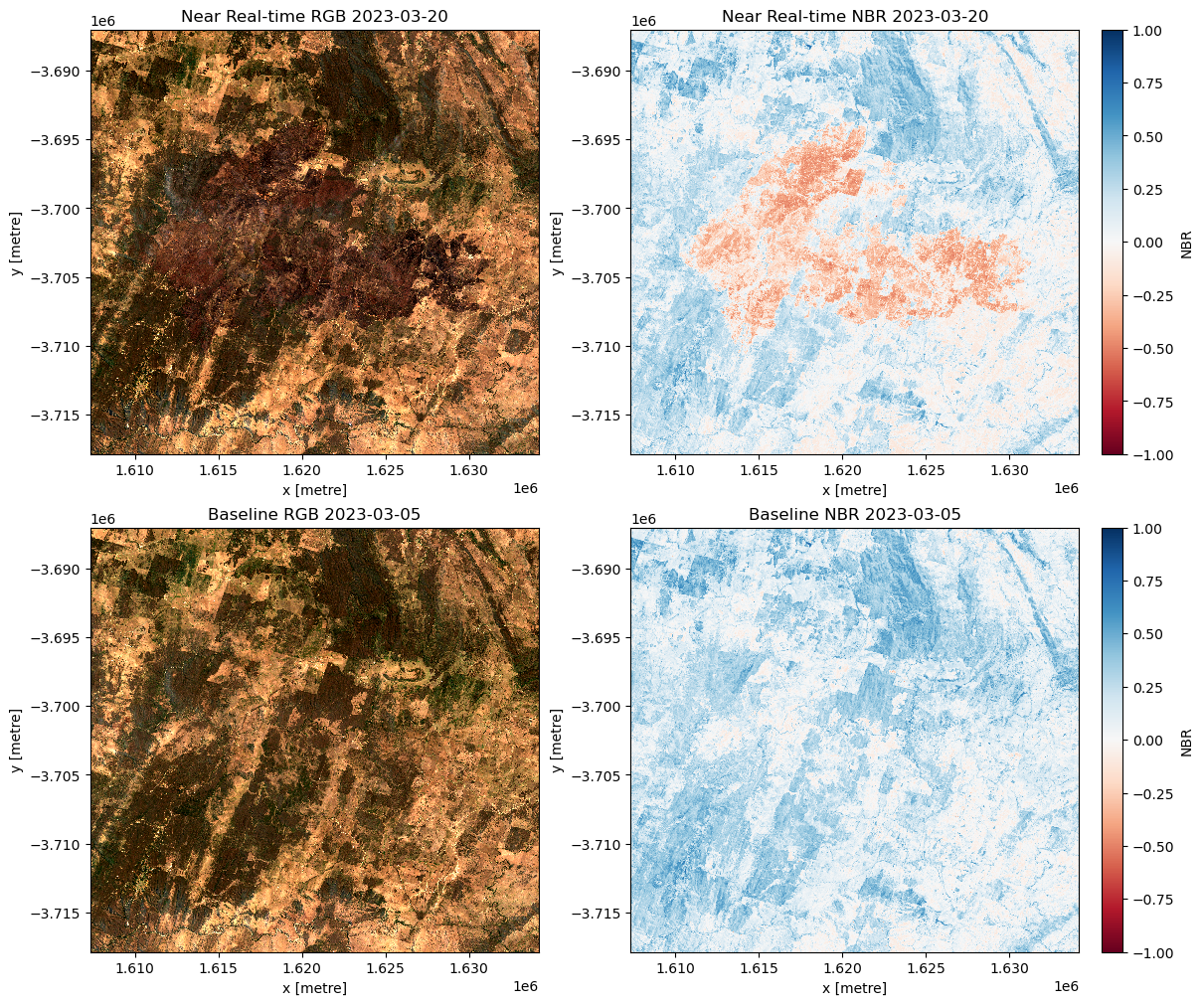

# Create a figure to visualise the selected images in true colour, as well as the NBR index.

f, axarr = plt.subplots(2, 2, figsize=(12, 10), squeeze=False, layout="constrained")

# Visualise the selected near real-time and baseline images in true colour

rgb(

nrt_img,

bands=["nbart_red", "nbart_green", "nbart_blue"],

ax=axarr[0, 0],

robust=True,

)

rgb(

baseline_img,

bands=["nbart_red", "nbart_green", "nbart_blue"],

ax=axarr[1, 0],

robust=True,

)

# Visualise the NBR index for each image

nrt_NBR.plot(cmap="RdBu", vmin=-1, vmax=1, ax=axarr[0, 1])

baseline_NBR.plot(cmap="RdBu", vmin=-1, vmax=1, ax=axarr[1, 1])

# Set subplot Titles

axarr[0, 0].set_title("Near Real-time RGB " + str(nrt_img.time.values)[:10])

axarr[0, 1].set_title("Near Real-time NBR " + str(nrt_img.time.values)[:10])

axarr[1, 0].set_title("Baseline RGB " + str(baseline_img.time.values)[:10])

axarr[1, 1].set_title("Baseline NBR " + str(baseline_img.time.values)[:10])

[15]:

Text(0.5, 1.0, 'Baseline NBR 2023-03-05')

dNBR vs RBR for Burnt Area Mapping

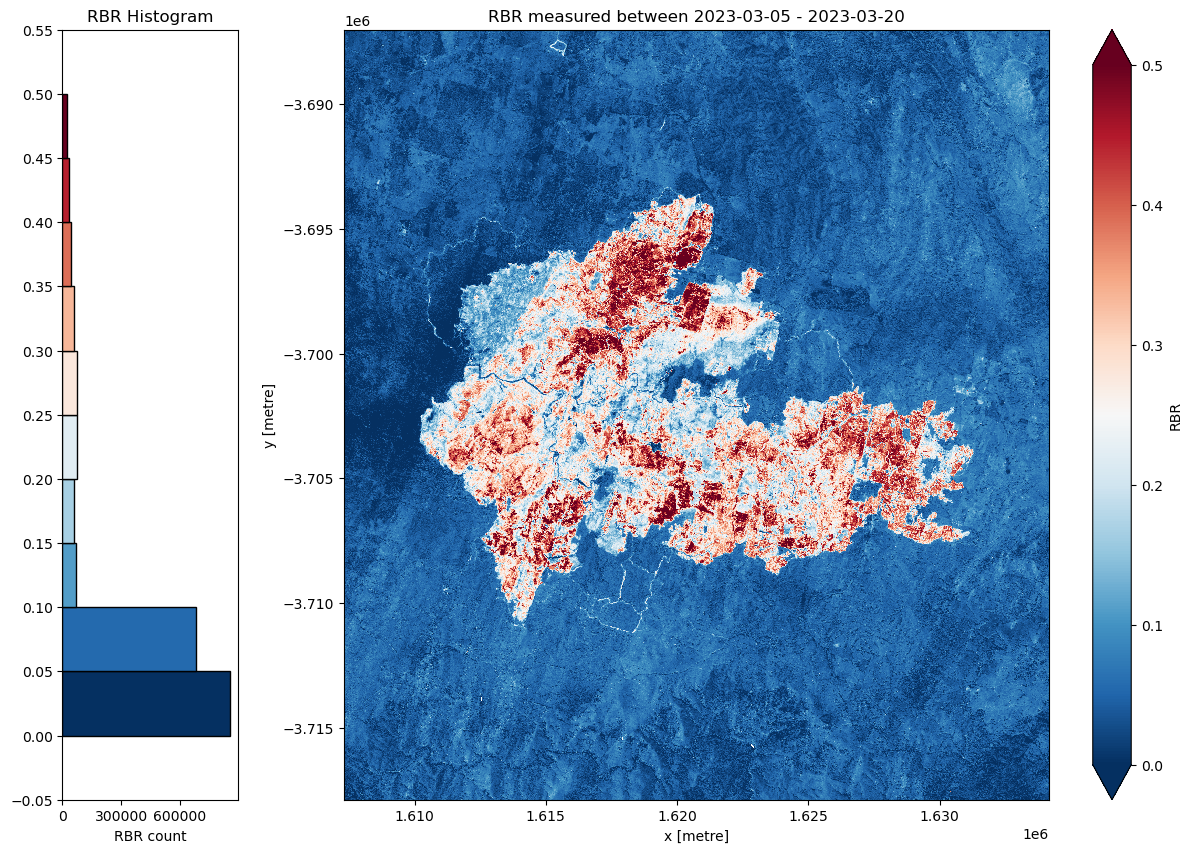

As previously discussed, two differenced fire severity indices are available in this notebook. Try using the dNBR index in the first instance. However, if the fire in your AOI occurs over less densely vegetated landscapes such as grasslands, you may wish to trial the RBR index. In this example, we have used the RBR index as the fire has occurred over substantial amounts of grass dominated areas.

Depending on your choice of Fire Severity Index, set the bam_method variable to either ‘dNBR’ or ‘RBR’.

[16]:

# set the bam_method variable to either 'dNBR' or 'RBR'.

bam_method = "RBR"

# Calculate the bam variable based on the chosen dFSI

if bam_method == "RBR":

RBR = dNBR / (baseline_NBR + 1.001)

bam = RBR

elif bam_method == "dNBR":

bam = dNBR

else:

print(

"Please make sure the 'bam_method' variable is correctly set to either 'RBR' or 'dNBR'"

)

#Rename the variable in the bam dataarray with the new dFSI

bam.name=(bam_method)

[17]:

# Create a figure to plot the chosen fire severity index

f, axarr = plt.subplots(

1, 2, figsize=(15, 10), squeeze=False, gridspec_kw={"width_ratios": [1, 5]}

)

# Calculate and round the dNBR dataarray value range to set determine the plots colourmap range

bam_NBR_min = round(float(bam.quantile(0.005)), 1)

bam_NBR_max = round(float(bam.quantile(0.995)), 1)

# PLot the dNBR dataarray on the second subplot of the above figure

bam.plot(cmap="RdBu_r", vmin=bam_NBR_min, vmax=bam_NBR_max, ax=axarr[(0, 1)])

# Plot a histogram of dNBR values in the first figure subplot.

# Calculate a colourmap from the dataarray plot by iterating through individual histogram patches

cm = plt.colormaps.get_cmap("RdBu_r")

n, bins, patches = xr.plot.hist(

darray=bam,

bins=np.arange(bam_NBR_min, bam_NBR_max + 0.05, 0.05),

align="mid",

orientation="horizontal",

ec="black",

yticks=(np.arange(bam_NBR_min - 0.05, bam_NBR_max + 0.05, step=0.05)),

ax=axarr[(0, 0)],

)

# Match the colour scale of the histogram to that used in the map plot.

bin_centers = 0.5 * (bins[:-1] + bins[1:])

col = bin_centers - min(bin_centers)

col /= max(col)

for c, p in zip(col, patches):

plt.setp(p, "facecolor", cm(c))

# Set titles for each subplot

axarr[0, 0].set_title(bam_method + " Histogram")

axarr[0, 1].set_title(

bam_method

+ " measured between "

+ str(baseline_img.time.values)[:10]

+ " - "

+ str(nrt_img.time.values)[:10]

)

# Set the x-axis label and number of x-axis ticks for the histogram plot

axarr[0, 0].set_xlabel(bam_method + " count")

axarr[0, 0].xaxis.set_major_locator(plt.MaxNLocator(3))

Setting the threshold value to identify burnt areas

A value needs to be chosen to delineate burnt and unburnt areas. This value can vary depending on the vegetation structure and environmental conditions of the area being mapped. A value of 0.3 could be used as a starting point, however it is helpful to interpret the above histogram and dNBR plot to help determine the most suitable value for your AOI. The selection of a threshold value involves compromise between a value that is so low that false returns are included, and high enough that low-severity and less vegetated burnt areas are excluded.

Set the chosen threshold value to the threshold variable below.

[18]:

# Set threshold value. Fire severity index values below this value will be masked out.

threshold = 0.15

# Apply threshold to the dNBR dataset to create the `burnt` dataset of burnt pixels

burnt = bam > threshold

# Mask real-time true colour image with the above `burnt` mask to show what has been captured as burnt area.

masked = bam.where(burnt == 1)

bands = ["nbart_red", "nbart_green", "nbart_blue"]

rgb(nrt_img.where(burnt == 1), bands=bands)

Optional: Morphological Post-Processing

The result of our analysis may have resulted in an unacceptable amount of isolated pixels and other noisy returns. We can apply morphological operations to the binary xr dataarray to remove this from the products and simplify the geometry of the output polygons. This step is optional, but can be useful when the analysis produces noisy and ‘speckled’ outputs.

Closing, Erosion, and Dilation operations are called below. Closing is a compound operation of a dilation followed by an erosion to remove small holes. Erosion shrinks the image pixels, helping to remove noisy and isolated returns. Dilatation extends image pixels to remove small holes and otherwise simplify the geometry of the derived polygons.

These operations are carried out using a disk structuring element. A default size of 3 pixels is used below, but this value can be altered to vary the degree of post-processing applied.

[19]:

# Define the size of the disk structuring element, measured in number of pixels.

# The default value is 5.

disk_size = 5

# Perform the Closing, Erosion, and Dilation operations to the burnt dataarray

dilated_data = xr.DataArray(

morphology.binary_closing(burnt, morphology.disk(disk_size)), coords=burnt.coords

)

erroded_data = xr.DataArray(

morphology.erosion(dilated_data, morphology.disk(disk_size)), coords=burnt.coords

)

dilated_data = xr.DataArray(

ndimage.binary_dilation(erroded_data, morphology.disk(disk_size)),

coords=burnt.coords,

)

# Save the results to the burnt variable

burnt = dilated_data

# Visualise the post-processed dataarray to show the final burnt area

rgb(nrt_img.where(dilated_data == 1), bands=bands)

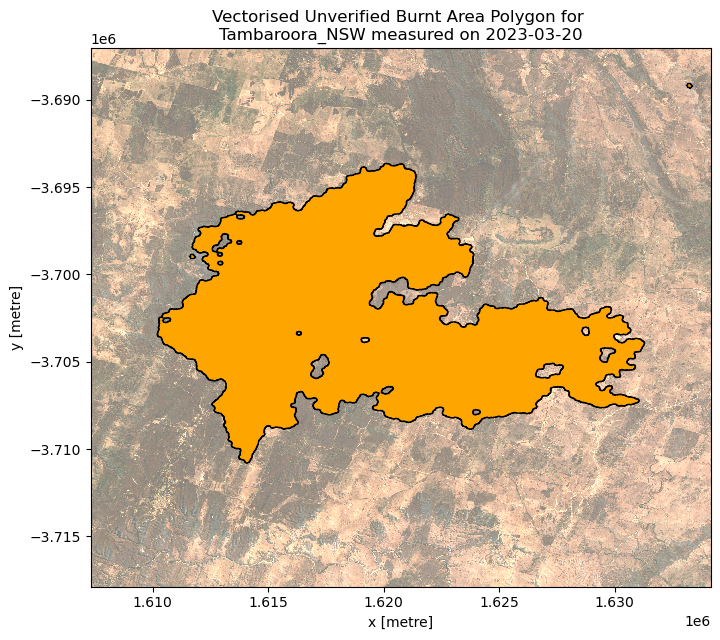

6. Convert raster data to vector and export products

Exporting Rasters

Three rasters are exported below: 1. An RGB geotiff of the Near Real-time imagery 2. An RGB geotiff of the baseline imagery 3. An unmasked single-band geotiff of the chosen delta NBR index - Those wanting to clip these images to the delineated burnt area can use the below shapefile.

[20]:

# Write near real-time fire image to multi-band GeoTIFF

cog.write_cog(

geo_im=nrt_img.to_array(),

fname=f"{area_name}_{str(nrt_img.time.values)[:10]}_near_realtime_image.tif",

overwrite=True,

)

# Write baseline reference image to multi-band GeoTIFF

cog.write_cog(

geo_im=baseline_img.to_array(),

fname=f"{area_name}_{str(baseline_img.time.values)[:10]}_baseline_image.tif",

overwrite=True,

)

# Turn delta NBR into a Xarray Dataset for export to GeoTIFF

cog.write_cog(geo_im=bam, fname=f"{area_name}_{str(nrt_img.time.values)[:10]}_{bam_method}.tif", overwrite=True)

[20]:

PosixPath('Tambaroora_NSW_2023-03-20_RBR.tif')

Converting the Raster BAM data to Vector format before exporting to shapefile

We will convert the raster dataarray into vector format using the xr_vectorize tool and export it to a shapefile. The shapefile will be visualised below.

[21]:

# Convert the burnt area from raster to vector format

gdf = xr_vectorize(

da=burnt, mask=burnt.values == 1, output_path=f"{area_name}_{str(nrt_img.time.values)[:10]}_{bam_method}.shp"

)

# Plot the vector data overlying a transparent NRT RGB Imagery

f, ax = plt.subplots(figsize=(8, 7))

gdf.plot(edgecolor="black", color="orange", ax=ax)

rgb(nrt_img, bands=["nbart_red", "nbart_green", "nbart_blue"], ax=ax, alpha=0.5)

ax.set_title(

f"Vectorised Unverified Burnt Area Polygon for \n{area_name} measured on "

+ str(nrt_img.time.values)[:10]

)

Exporting vector data to Tambaroora_NSW_2023-03-20_RBR.shp

[21]:

Text(0.5, 1.0, 'Vectorised Unverified Burnt Area Polygon for \nTambaroora_NSW measured on 2023-03-20')

Additional information

License: The code in this notebook is licensed under the Apache License, Version 2.0. Digital Earth Australia data is licensed under the Creative Commons by Attribution 4.0 license.

Contact: If you need assistance, please post a question on the Open Data Cube Discord chat or on the GIS Stack Exchange using the open-data-cube tag (you can view previously asked questions here). If you would like to report an issue with this notebook, you can file one on

GitHub.

Last modified: February 2025

Compatible datacube version:

[22]:

print(datacube.__version__)

1.8.19