Surface area duration plots from DEA Waterbodies data

Sign up to the DEA Sandbox to run this notebook interactively from a browser

Compatibility: Notebook currently compatible with both the

NCIandDEA SandboxenvironmentsProducts used: DEA Waterbodies time series data (available online)

Background

A “surface area duration” (SAD) curve depicts the cumulative percentage of time that a waterbody had a larger extent than a given percentage of its maximum extent—in other words, the total amount of time that a waterbody was at least a certain amount full. These are similar to flow duration curves, and describe the long-term behaviour of a waterbody. SAD curves may be useful for identifying different types of waterbodies, which helps us to understand how water is used throughout Australia.

Digital Earth Australia use case

The DEA Waterbodies product uses Geoscience Australia’s archive of over 30 years of Landsat satellite imagery to identify where almost 300,000 waterbodies are in the Australian landscape and tells us how the wet surface area within those waterbodies changes over time. These data can be analysed to obtain insights into the duration and temporal dynamics of inundation for any mapped waterbody in Australia.

Description

This notebook plots “surface area duration” (SAD) curves, which show for how long a waterbody is filled at a given surface area. Such a curve can be generated for any DEA Waterbody. We also generate a time-dependent version of this plot called a “short-time surface area duration” (STSAD) curve, which shows how the surface area duration curve varies over time.

This analysis should work for any time series stored in a similar format.

Getting started

Run the first cell, which loads all modules needed for this notebook. Then edit the configuration to match what you want the notebook to output.

Load modules

[1]:

%matplotlib inline

import numpy as np

import pandas as pd

import scipy.signal

from pathlib import Path

from matplotlib import pyplot as plt

import sys

sys.path.insert(1, '../Tools/')

from dea_tools.temporal import calculate_sad, calculate_stsad

Configuration

To generate a plot for a waterbody with a given geohash, specify the geohash here:

[2]:

geohash = "r3f225n9t_v2" # Weereewa/Lake George

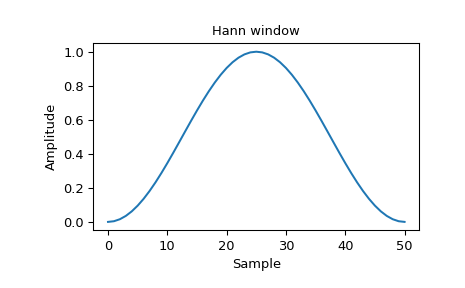

Also specify the window for the STSAD plot. Use ‘hann’ if you have no opinions on this, or ‘boxcar’ if you want a window that is easy to think about. You can see a list of possible windows here. Hann is fairly standard for short-time transforms like this and will give a smooth STSAD:

Boxcar is a sliding window:

[3]:

window = "hann"

Finally, specify the path to the waterbodies CSVs:

[4]:

waterbody_csv_path = "https://data.dea.ga.gov.au/derivative/dea_waterbodies/2-0-0/timeseries"

Load DEA Waterbodies data

The DEA Waterbodies time series are stored as CSV files. Each waterbody is labelled by a geohash, e.g. Weereewa is r3f225n9h. They are stored online (on Amazon S3) in a folder named after the first four characters of the geohash, and the filename is the geohash, e.g. Weereewa is at https://data.dea.ga.gov.au/projects/WaterBodies/timeseries/r3f2/r3f225n9h.csv. Each CSV has three columns: Observation Date, Wet pixel percentage, Wet pixel count (n = ?) where ? is the total

number of observations. An example is:

Observation Date,Wet pixel percentage,Wet pixel count (n = 230894)

1987-05-29T23:14:29Z,,

1987-07-16T23:15:29Z,,

1987-09-02T23:16:50Z,,

1987-09-18T23:17:13Z,19.9,45926

First we will read the CSV containing the surface area vs time observations data directly from the URL path using pandas. We will rename the Observation Date, Wet pixel percentage, Wet pixel count (n = ?) columns to more consistent and easier to access names:

date, pc_wet, px_wet

We also ensure that the ‘date’ column is parsed as a datetime, and convert the data percentages to decimals:

[5]:

# Set path to the CSV file

csv_path = f"{waterbody_csv_path}/{geohash[:4]}/{geohash}.csv"

# Load the data using `pandas`:

time_series = pd.read_csv(csv_path,

header=0,

names=["date", "pc_wet", "px_wet"],

parse_dates=["date"],

index_col="date",

)

# Convert percentages into a float between 0 and 1.

time_series.pc_wet /= 100

We can now inspect the first few rows of the data:

[6]:

time_series.head()

[6]:

| pc_wet | px_wet | |

|---|---|---|

| date | ||

| 1987-05-29 23:14:29+00:00 | NaN | NaN |

| 1987-05-29 23:14:29+00:00 | NaN | NaN |

| 1987-07-16 23:15:29+00:00 | NaN | NaN |

| 1987-07-16 23:15:29+00:00 | NaN | NaN |

| 1987-09-02 23:16:50+00:00 | NaN | NaN |

Interpolate data to daily values

DEA Waterbodies data is stored with one row per satellite observation. To make our data easier to analyse by time, we can use interpolation the data to estimate the percentage coverage of water for every individual day in our time series.

[7]:

# Round dates in the time series dataset to the nearest whole day

time_series = time_series.dropna()

time_series.index = time_series.index.round(freq="d")

time_series = time_series[~time_series.index.duplicated(keep ='first')]

# Create a new `datetime` index with one value for every individual day

# between the first and last DEA Waterbodies observation

dt_index = pd.date_range(start=time_series.index[0],

end=time_series.index[-1], freq="d")

# Use this new index to modify the original time series so that it

# has a row for every individual day

time_series = time_series.reindex(dt_index)

assert len(time_series) == len(dt_index)

Then interpolate (linearly, since it’s the least-information thing other than constant).

[8]:

# Replace NaNs with a linear interpolation of the time series.

time_series.pc_wet.interpolate(inplace=True, limit_direction="both")

If we inspect the first rows in the dataset again, we can see that we now have one row for every day (e.g. 1987-05-30, 1987-05-31) instead of a row for each satellite observation:

[9]:

time_series.head()

[9]:

| pc_wet | px_wet | |

|---|---|---|

| 1987-09-19 00:00:00+00:00 | 0.199500 | 31925.0 |

| 1987-09-20 00:00:00+00:00 | 0.200003 | NaN |

| 1987-09-21 00:00:00+00:00 | 0.200506 | NaN |

| 1987-09-22 00:00:00+00:00 | 0.201009 | NaN |

| 1987-09-23 00:00:00+00:00 | 0.201512 | NaN |

Calculate SADs

For each rolling time step, calculate the SADs.

[10]:

sad_y, sad_x, sads = calculate_stsad(

time_series.pc_wet, window_size=365 * 2, window=window, step=10

)

Convert the time axis into integers for calculating tick locations.

[11]:

year_starts = (time_series.index.month == 1) & (time_series.index.day == 1)

year_start_days = (time_series.index - time_series.index[0])[year_starts].days

year_start_years = time_series.index.year[year_starts]

Calculate the overall SAD.

[12]:

sad = calculate_sad(time_series.pc_wet)

Calculate the SAD for the Millenium drought.

[13]:

# Select observations between the start and end of the Millenium drought.

millenium_drought_start = pd.to_datetime('2001-01-01', utc=True)

millenium_drought_end = pd.to_datetime('2009-12-12', utc=True)

millenium_drought = (time_series.index > millenium_drought_start) & (time_series.index < millenium_drought_end)

millenium_sad = calculate_sad(time_series.pc_wet[millenium_drought])

Plot SADs

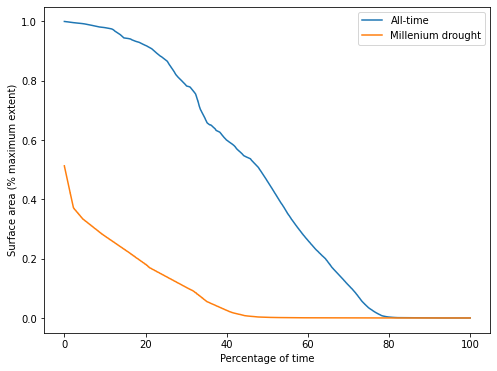

We can then plot the SAD.

[14]:

plt.figure(figsize=(8, 6))

xs = np.linspace(0, 100, len(sad))

plt.plot(xs, sad, label='All-time')

xs = np.linspace(0, 100, len(millenium_sad))

plt.plot(xs, millenium_sad, label='Millenium drought')

plt.xlabel("Percentage of time")

plt.ylabel("Surface area (% maximum extent)")

plt.legend();

From this plot of the SAD for Weereewa/Lake George, we can tell that the lake was at least partly-inundated for 25 of the last 33 years. The lake was rarely full. The slope of the curve indicates how the lake varied over time, but is hard to interpret without comparison to another SAD curve.

Comparing the all-time curve to the SAD during the Millenium drought shows that the lake was significantly drier during the drought, never exceeding 50% capacity. The very sharp drop at the beginning of the SAD curve indicates rapid drying when the lake was near its peak, suggesting either that the lake bed is not steep at the point where it is 50% full, or that the only significant influx of water into the lake was during a period of rapid drying.

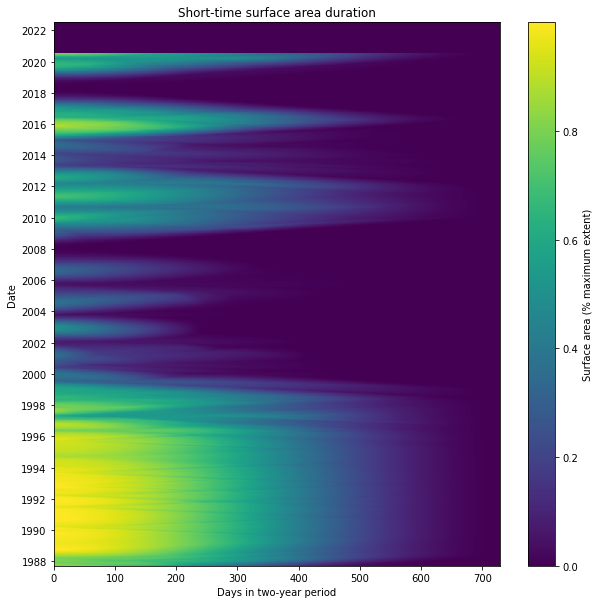

[16]:

plt.figure(figsize=(10, 10))

plt.pcolormesh(sad_x, sad_y, sads)

plt.xlabel("Days in two-year period")

plt.yticks(year_start_days[::2], year_start_years[::2])

plt.ylabel("Date")

plt.colorbar(label="Surface area (% maximum extent)")

plt.title("Short-time surface area duration");

/tmp/ipykernel_186/3543713553.py:2: MatplotlibDeprecationWarning: shading='flat' when X and Y have the same dimensions as C is deprecated since 3.3. Either specify the corners of the quadrilaterals with X and Y, or pass shading='auto', 'nearest' or 'gouraud', or set rcParams['pcolor.shading']. This will become an error two minor releases later.

plt.pcolormesh(sad_x, sad_y, sads)

The STSAD plot helps visualise the change in Weereewa/Lake George over time. Here, the Millenium drought is clearly visible between 2000 and 2009 as a period of very little wet extent. The lake was mostly full during the 1990s, and it filled and dried fairly consistently over time, indicating that it is unregulated. It was stably partly-filled for significant parts of 1988, during the 2010–12 La Niña, and 2016–17.

Additional information

License: The code in this notebook is licensed under the Apache License, Version 2.0. Digital Earth Australia data is licensed under the Creative Commons by Attribution 4.0 license.

Contact: If you need assistance, please post a question on the Open Data Cube Discord chat or on the GIS Stack Exchange using the open-data-cube tag (you can view previously asked questions here). If you would like to report an issue with this notebook, you can file one on

GitHub.

Last modified: December 2023

Tags

.

Tags: NCI compatible, DEA Waterbodies, data visualisation, time series, water